Self-Driving Cars: Driver Attention

In a previous article, I talked about the exciting potential of self-driving cars and expressed my concerns that we’re entering a dangerous transition period as self-driving technologies are becoming available to consumers more rapidly than we can safely use them. In this article, I’d like to discuss these concerns in more detail.

The National Highway Traffic Safety Administration (2013) has identified five levels of vehicle automation:

- Level 0: No Automation – The driver is in complete control.

- Level 1: Function-Specific Automation – Selected functions are automated, such as adaptive cruise control (which maintains distance from the vehicle you are following) or stability control. The driver remains in control.

- Level 2: Combined Function Automation – At least two primary control functions are automated and work together to share control with the driver. Tesla’s Autopilot is the best-known example of Level 2 automation, and many other manufacturers have introduced, or are working hard to develop, Level 2 vehicles.

- Level 3: Limited Self-Driving Automation – Selected control functions are automated and work together to assume control of the vehicle in certain driving situations. “The driver is expected to be available for occasional control,” NHTSA states, “but with sufficiently comfortable transition time.”

- Level 4: Full Self-Driving Automation – Google is striving for Level 4: “The vehicle is designed to perform all safety-critical driving functions and monitor roadway conditions for an entire trip” (National Highway Traffic Safety Administration, 2013). In real-world testing, Google treats the cars as Level 3, with a driver ready to take control.

At Levels 2 and 3, the driver and car share control. As I discussed in the previous article, Level 2 cars are already on the road, and the technology is in many ways quite impressive and will eventually be superior to human drivers. My concern, however, is how well the driver can monitor a Level 2 or 3 vehicle and quickly take control when necessary.

It’s All About Attention

Human drivers are prone to distraction; we talk on the phone, text, interact with navigation systems, and even browse the web. Distractions aren’t necessarily electronic: we put on make-up, eat, talk with our passengers, and so on. All of these behaviors take our eyes, and our attention, off the road and off the task of driving.

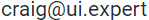

Cars have become safer and safer. Since 1929, the first year data is available, fatalities per million miles driven have steadily declined – until around 2008, when the trend line flattened out. The graph below shows the data in recent years. Many attribute flattening trend line to the prevalence of distracted driving. Cars themselves are safer, but we’re paying less attention as we drive, offsetting the benefits of safer technology.

Source: National Highway Traffic Safety Administration, 2015

Self-driving cars don’t get distracted – they pay attention all the time. This is a huge advantage, and one of the main reasons that self-driving cars will become safer than human drivers.

But we’re not there yet. Google is closest, but you can’t buy a Google car. You can buy a Tesla Model S, which is considered a Level 2 car. Some drivers report that Tesla’s Autopilot is initially stressful, but that they adapt to the technology: “The first time I tried Autopilot, I switched it off after half a minute. My coworker… got a blood pressure headache after his first hour behind the wheel. This feeling persists the first couple times you try the system. But eventually you learn to relax” (Orlove, 2016). As some drivers become comfortable, they treat the Model S as a Level 3 car and cede control. This is the issue I discussed in the previous article.

As drivers become comfortable with a Level 2 car, they pay less attention. The Atlantic (LaFrance, 2015) reports on Google’s early experience with driver attention:

In 2012, when Google enlisted volunteers to test its new self-driving feature on the freeway, it made people sign contracts promising to stay engaged, even as the car drove itself. “We told them this was early-stage technology and that they should pay attention 100 percent of the time—they needed to be ready to take over driving at any moment,” Google wrote in a blog post. “They signed forms promising to do this, and they knew they’d be on camera.” And yet people didn’t pay attention even then… For Google, that experience prompted a pivot from developing incremental driver-assistance technologies to focusing on building a fully-autonomous Level 4 vehicle.

Researchers at Virginia Tech (LaFrance, 2015) found that self-driving features had a significant impact on drivers’ attention, with drivers spending more time looking away from the road. In another study, researchers from Stanford University put 48 students in the driver’s seat of a simulated self-driving car (Pritchard, 2015). Thirteen of them began to nod off.

Even if the driver remains awake, he or she still needs time to realize what is going on and take control of the vehicle – early research suggests as long as five seconds. At 60 miles per hour, the car will cover 1.5 football fields during that time.
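The football-field comparison follows from a quick unit conversion. A minimal worked version, assuming the five-second takeover time mentioned above and a 100-yard (300-foot) field excluding the end zones:

```latex
\begin{aligned}
v &= 60\ \tfrac{\text{mi}}{\text{h}} \times \frac{5280\ \text{ft/mi}}{3600\ \text{s/h}} = 88\ \text{ft/s} \\
d &= v\,t = 88\ \text{ft/s} \times 5\ \text{s} = 440\ \text{ft} \\
\frac{d}{300\ \text{ft}} &\approx 1.47 \approx 1.5\ \text{football fields}
\end{aligned}
```

Counting the end zones (360 feet), the same 440 feet is closer to 1.2 fields; either way, the car travels well over a football field before the driver is back in control.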

Situational Awareness

There’s a concept from human factors called situational awareness. It refers to our perception of what is occurring in our environment, our comprehension of what we perceive, and our prediction of what might happen next. For example, while driving, I see an approaching car and can see only the side of its driver’s head. I realize this means the driver has not seen me (i.e., has not looked in my direction), so I momentarily lift off the gas in case he pulls out in front of me.

Self-driving cars are not so good at situational awareness. Tesla’s Autopilot, for example, recognizes lane markings and other vehicles, but not road signs, stop lights, driver hand signals, and so on. Further, commercially available vehicles are not so good at making decisions based on what they do recognize, especially in unusual circumstances. As those capabilities improve, cars will attain the safety of Level 4. Today, however, the driver needs to maintain situational awareness and pay attention even when the car seems to be in control. The researchers at Google, Virginia Tech, and Stanford found that drivers did not always do so.

Situational awareness is further threatened when a task is highly automated (Wickens, Lee, Liu, & Gordon-Becker, 2003). Evidence from aviation points to a problem that may lie ahead for drivers. An Inspector General review of FAA oversight found that “pilots who typically fly with automation can make errors when confronted with an unexpected event or transitioning to manual flying” (FAA Office of the Inspector General, 2016). The crash of Air France Flight 447 provides an example. When the aircraft’s pitot tubes, the pressure probes that measure airspeed, iced over, the autopilot disconnected, leaving the pilots confused about the state of the airplane and unaware that their Airbus A330 was in a stall. Because of their confusion, they failed to take the simple step needed to recover from the stall: lowering the nose of the airplane (Slate, 2015). Will drivers in highly automated Level 3 cars be able to maintain situational awareness in analogous situations? I believe this is a real risk. Granted, piloting an aircraft is a more complex task than driving a car, but a pilot flying at 30,000 feet has time to deal with an unexpected situation. A driver may have less than a second to grab the wheel before the car crosses the center line.

Solutions

The ultimate solution is to make our way through this transition phase to true Level 4 cars. Google recognizes the dangers of shared control and has not attempted to develop a Level 2 or 3 vehicle; it has gone straight to Level 4. But Google has deep pockets and can afford to take a long-term view.

Automakers, on the other hand, feel competitive pressure to bring Level 2 and 3 technologies to market, and are doing so while adding features to monitor driver attention. Tesla will nag you to keep your hands on the wheel, a feature that seems quite unpopular based on comments on Tesla forums (another indication that many owners consider their Model S’s to be Level 3 vehicles). Many manufacturers are developing systems that monitor drivers’ eyes to determine whether they are looking at the road; Toyota has offered such a system since 2006. Mercedes-Benz’s Attention Assist feature is even more sophisticated and “takes note of over 70 parameters in the first minutes of a drive to get to know your unique driving style. As your journey continues, it can detect certain steering corrections that suggest the onset of drowsiness” (Mercedes-Benz, 2016).

These solutions are likely to help, and at some point the net result of all these technologies will be safer vehicles. It is just unclear whether we have reached that point, and how increased driver inattention interacts with the increased safety potential of self-driving vehicles. Self-driving technology is very exciting and gets a lot of coverage on the web and in the news, but I believe equal attention should be paid to the risks of driver inattention in this period of rapid change.

Dr. Craig Rosenberg is an entrepreneur, human factors engineer, computer scientist, and expert witness. You can learn more about Dr. Rosenberg and his expert witness consulting business at www.ui.expert

Bibliography

- FAA Office of the Inspector General. (2016). Enhanced FAA Oversight Could Reduce Hazards Associated with Increased Use of Flight Deck Automation. Washington, DC: Federal Aviation Administration.

- LaFrance, A. (2015, December 1). The High-Stakes Race to Rid the World of Human Drivers. Retrieved January 30, 2016, from The Atlantic: http://www.theatlantic.com/technology/archive/2015/12/driverless-cars-are-this-centurys-space-race/417672/

- Mercedes-Benz. (2016). Safety: Decades of preparation. Milliseconds of proof. Retrieved January 30, 2016, from www.mbusa.com: https://www.mbusa.com/mercedes/benz/safety

- National Highway Traffic Safety Administration. (2013). Preliminary Statement of Policy Concerning Automated Vehicles. Washington, DC: National Highway Traffic Safety Administration.

- National Highway Traffic Safety Administration. (2015, September 19). NCSA DATA RESOURCE. Retrieved September 19, 2015

- Orlove, R. (2016, January 27). How A Tesla With Autopilot Forced Us To Take The Road Trip Of The Past. Retrieved January 27, 2016, from Jalopnik: http://jalopnik.com/how-a-tesla-with-autopilot-forced-us-to-take-the-road-t-1752024377?rev=1453912136206

- Pritchard, J. (2015, November 30). When self-driving cars need help: Stanford study’s surprising finding. Retrieved January 30, 2016, from San Jose Mercury News: http://www.mercurynews.com/bay-area-news/ci_29183070/when-self-driving-cars-need-help-stanford-studys

- Slate. (2015, June 25). Air France Flight 447 and the Safety Paradox of Automated Cockpits. Retrieved January 26, 2016, from The Eye: Slate’s Design Blog: http://www.slate.com/blogs/the_eye/2015/06/25/air_france_flight_447_and_the_safety_paradox_of_airline_automation_on_99.html

- Wickens, C. D., Lee, J. D., Liu, Y., & Gordon-Becker, S. (2003). Introduction to Human Factors Engineering (2nd ed.). Upper Saddle River, NJ: Pearson Prentice Hall.